Problem to Solve: How can we best identify and mitigate Denial of Service (DoS) Attacks?

Every day, service providers and large enterprises fall victim to denial of service (DoS) attacks. In recent years, Sony and Microsoft were unable to deliver gaming services to their customers over the holidays because of relatively simple attacks. So why couldn’t these two powerhouse companies fully protect themselves?

Denial of service is exactly what the name says: legitimate users are denied access to a service. It can be triggered with simple or sophisticated tools and techniques. Attacks are hard to avoid, especially when attackers know which services you deliver. However, you can stop an attack quickly and limit the damage if you have the right tools and architecture to identify the problem, collect the facts, and stop the bleeding.

A denial of service attack gets scary fast when many computers, sometimes thousands, act together to bring down a service in a matter of seconds. This is called a distributed denial of service (DDoS) attack. Using a mix of methods, these computers all target the same server or servers to exhaust their resources and deliberately take them down.

Network devices in the path, such as firewalls, switches, routers, and load balancers, are also affected. A revenue-generating service is suddenly offline, and your IT staff is scrambling to get the “lights back on” and make customers happy again. Every vendor in the path is called in to help, and the team debates different mitigation strategies, all of which takes time.

Disaster Recovery Methods

What architecture can sustain vital technology infrastructure and quickly restore services after you become a victim? Network and application architectures need fundamental protection and disaster recovery methods.

- The first method is to create layers of protection in front of the “crown jewels” the business delivers. These layers should be able to stop volumetric, protocol, and advanced application-layer attacks.

- The second method is to build flexibility into the architecture so that those layers can absorb different types of attacks. You need an elastic architecture that keeps your services running whether mitigation is inline, out-of-band, or delivered by a hosted cloud service.

- The third method is to learn from what happened; I call this “The Save Our Sanity” method. Attacks ALWAYS happen, and they change every minute. Visibility into the traffic traversing the network 24/7/365 is what saves your sanity. Telemetry such as flow exports from routers, logs from logging servers, and packet data from network taps collects the data the business needs to dig in and learn.

- The fourth method is to have a sophisticated software engine that helps IT staff quickly identify what is happening, decide where to focus, and even trigger mitigation. The telemetry described above feeds this engine, which can automate comparing current traffic against a baseline of “normal” behavior to spot anomalies and quickly bring the lights back on.
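
To make the fourth method more concrete, here is a minimal Python sketch of how flow telemetry might feed a baseline-versus-anomaly check. It is not the engine the scenario describes: `FlowRecord` is a hypothetical, heavily simplified stand-in for a router flow export (real NetFlow or IPFIX records carry many more fields), and the exponentially weighted moving average (EWMA) baseline, the `alpha`, `threshold_pct`, and `warmup` values, and the IP addresses are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FlowRecord:
    """Hypothetical, simplified flow-export record (real NetFlow/IPFIX has many more fields)."""
    minute: int     # time bucket in which the flow was observed
    src_ip: str
    dst_ip: str
    dst_port: int
    syn_count: int  # TCP SYN packets seen in this flow


def syns_per_minute(records, dst_ip):
    """Sum SYN packets sent to one destination, returned in minute order."""
    totals = defaultdict(int)
    for r in records:
        if r.dst_ip == dst_ip:
            totals[r.minute] += r.syn_count
    return [totals[m] for m in sorted(totals)]


class EwmaBaseline:
    """Learns "normal" as an EWMA and flags samples far above it."""

    def __init__(self, alpha=0.2, threshold_pct=100.0, warmup=3):
        self.alpha = alpha                  # weight given to each new normal sample
        self.threshold_pct = threshold_pct  # flag deviations above this percentage
        self.warmup = warmup                # samples to learn from before flagging
        self.mean = None
        self.seen = 0

    def observe(self, value):
        """Return (deviation_pct, is_anomaly) for a sample, then update the baseline."""
        if self.mean is None:
            self.mean = float(value)
            self.seen = 1
            return 0.0, False
        # Floor the denominator at 1 to avoid dividing by zero on an idle link.
        deviation_pct = (value - self.mean) / max(self.mean, 1.0) * 100.0
        anomaly = self.seen >= self.warmup and deviation_pct > self.threshold_pct
        if not anomaly:
            # Learn only from normal-looking samples so an attack doesn't become "normal".
            self.mean = self.alpha * value + (1 - self.alpha) * self.mean
        self.seen += 1
        return deviation_pct, anomaly


# Usage: five quiet minutes toward a web server, then a burst from many sources.
records = []
for minute in range(5):
    records.append(FlowRecord(minute, "203.0.113.5", "10.0.0.10", 443, 500))
    records.append(FlowRecord(minute, "203.0.113.6", "10.0.0.10", 443, 500))
for i in range(50):
    records.append(FlowRecord(5, f"198.51.100.{i}", "10.0.0.10", 443, 100))
records.append(FlowRecord(5, "203.0.113.7", "10.0.0.53", 53, 10))  # other host, ignored

series = syns_per_minute(records, "10.0.0.10")
baseline = EwmaBaseline()
results = [baseline.observe(v) for v in series]
print(series)        # [1000, 1000, 1000, 1000, 1000, 5000]
print(results[-1])   # (400.0, True)
```

Skipping the baseline update for flagged samples is a deliberate choice: it keeps a sustained attack from gradually being learned as the new normal. A production engine would track many metrics per destination and tune its thresholds against real traffic.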

Consider This Scenario

Last year, a SYN flood against your web server environment led to bad social media coverage and $1M in potential lost revenue. As Lead Architect, you were called in and asked to come up with a solution. Since then, you have applied the fundamental methods described above to arm the business and dramatically cut the time it takes to identify and resolve attacks.

This time, right before school started in August, you were sitting at your desk when your pager went off. The help desk described a wave of callers who could not reach the web service that delivers online student loan approvals. Because this service was mission-critical to the business, the call went straight to you, the top escalation tier, for fast resolution.

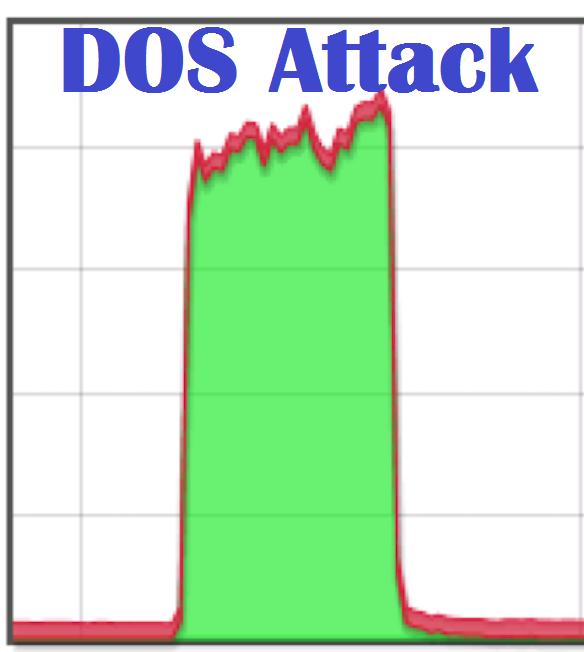

Using the software engine you had put in place, you noticed increased traffic volume from the edge router to the DMZ core, shown by the dashboard tiles and time-series graphs, all fed into the engine by taps and other intelligent data sources. With connection requests to the web server farm that hosts loan processing up 400% over normal and 100% packet loss on the firewall, you dove deeper into the gigabytes of traffic.

The requesters were not sending back the final ACK of the TCP three-way handshake, the acknowledgement that would tell the server farm they were ready to start the loan application process, and the source IP addresses were random and numerous. This matched last year’s attack pattern, which you remembered clearly from months of data collection and analysis.
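
The SYN flood signature described above (many connection requests that never complete the handshake, arriving from many random sources) can be sketched as a simple heuristic. `TcpEvent`, the string flags, and the `min_half_open` and `min_sources` thresholds are hypothetical simplifications; the sketch assumes a single protected server, so connections are keyed only by source IP and port, and it treats any client ACK as completing the handshake.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TcpEvent:
    """Hypothetical, simplified client-to-server TCP packet, e.g. decoded from a tap."""
    src_ip: str
    src_port: int
    flags: str  # "SYN" opens a connection; "ACK" completes the three-way handshake


def half_open_summary(events):
    """Return (half-open connections, distinct source IPs behind them)."""
    pending = set()  # (src_ip, src_port) pairs that sent SYN but no final ACK yet
    for e in events:
        key = (e.src_ip, e.src_port)
        if e.flags == "SYN":
            pending.add(key)
        elif e.flags == "ACK":
            pending.discard(key)
    sources = {ip for ip, _ in pending}
    return len(pending), len(sources)


def looks_like_syn_flood(events, min_half_open=100, min_sources=50):
    """Heuristic: many half-open connections spread across many source IPs."""
    half_open, sources = half_open_summary(events)
    return half_open >= min_half_open and sources >= min_sources


# Usage: legitimate clients finish the handshake; spoofed SYNs never do.
legit = []
for i in range(20):
    legit.append(TcpEvent(f"203.0.113.{i}", 40000 + i, "SYN"))
    legit.append(TcpEvent(f"203.0.113.{i}", 40000 + i, "ACK"))

rng = random.Random(7)  # seeded so the example is repeatable
flood = [
    TcpEvent(f"198.51.{rng.randrange(256)}.{rng.randrange(256)}", rng.randrange(1024, 65536), "SYN")
    for _ in range(500)
]

print(half_open_summary(legit))             # (0, 0)
print(looks_like_syn_flood(legit))          # False
print(looks_like_syn_flood(legit + flood))  # True
```

Real detectors usually work on sampled or aggregated data and scale their thresholds to the service’s normal load, but the two signals are the ones recognized in this scenario: half-open connections and source diversity.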

Your firewall routes the DMZ network where the load balancer hosts the virtual IP (VIP) address for the web servers, and it is first in the path of the attack, so you decided to protect the firewall. Black hole routes were not an option: they drop all traffic to the target, legitimate requests included, and you could not afford complete downtime. You needed a method that would not affect other services.

A few minutes later, you deployed the out-of-band edge topology for mitigation. The affected traffic was diverted to the cloud provider you had hired for mitigation, protection kicked in, and the loan application and approval system for college students began working again.

Last year, relief required black hole routes, and it took four hours just to identify what was happening. While you worked to identify it, you toggled the black hole routes on and off as the attack moved to the business’s DNS infrastructure, causing more damage. This time, your software engine helped you identify the attack in 30 seconds, and you stopped it in 5 minutes. That is a 99.8% decrease in identification time, a 97.9% decrease in service outage time, and roughly a 95% decrease in potential lost revenue.
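
The first two percentages can be checked with simple arithmetic. The scenario does not state how long last year’s outage lasted; the 97.9% figure is consistent with roughly four hours of outage versus 5 minutes, so this sketch assumes that. The revenue figure cannot be reproduced from the numbers given, so it is not computed here.

```python
def pct_decrease(before, after):
    """Percentage decrease from `before` to `after`, both in the same unit."""
    return (before - after) / before * 100


# Identification time: four hours last year vs. 30 seconds this year (in seconds).
identification = pct_decrease(4 * 60 * 60, 30)

# Outage time: ASSUMES last year's outage also lasted about four hours,
# compared with 5 minutes this year (in minutes).
outage = pct_decrease(4 * 60, 5)

print(f"identification: {identification:.1f}%")  # identification: 99.8%
print(f"outage: {outage:.1f}%")                   # outage: 97.9%
```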

In summary, your business will be a victim of DoS; it’s just a matter of time. Do you have a plan to get the lights back on when they go out? To ensure that your services are delivered on premises, off premises, or both (as in a hybrid cloud), you need fundamentally sound methods in place to protect the business.