Problem to Solve: My company has a lot of Network Attached Storage (NAS) devices in the environment. How can I best triage a throughput performance issue?

GUEST BLOGGER – Mark Fink, Network Engineer with 20+ years of experience

A vexed customer called me one afternoon for my advice on what he was sure was a network issue, but he was at his wit’s end figuring it out. The facts of the case:

- He was copying large video files from a Windows server to a NAS server, over NFS.

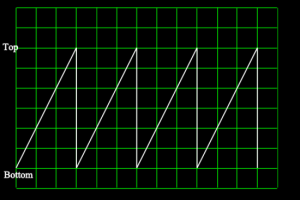

- When he copied a file using Windows Explorer, the utilization graph that appears during the copy would ramp up, abruptly drop to near 0, work its way back up again, and repeat, creating a saw-tooth pattern.

He had spent who knows how much time running iperf, which pushed a steady 900+ Mbps data stream between the two hosts. He had also disabled Windows TCP auto-tuning and tweaked a variety of settings between the NIC and the Windows TCP stack, in the mistaken belief that these were to blame. All to no avail; in fact, the changes only made overall throughput worse. He got the best results by simply putting Windows back to its defaults.

But still, we continued to see the persistent saw-tooth graph. And his conviction was that this was a ‘mysterious network’ issue.

The best place to begin for any performance triage exercise of this sort is to analyze the packet stream between the two hosts. Thankfully, he had a packet capture appliance on the scene and we were able to perform some quick analysis on these voluminous 5 and 6GB file copies.

The analysis revealed zero window events occurring regularly through the transfer, from the NAS server to the Windows server. And I was expecting this result given the utilization graph – the saw-tooth pattern is a telltale sign of this behavior. The point of the trace was to verify that was happening. There were no other issues seen: no retransmissions or duplicate ACKs, which are indicators of packet loss.

So what causes Zero Window Events?

A zero window, in this case, was the NAS server’s way of telling the Windows server, “Hey, I’m not keeping up with data flow here, please stop for a moment.” So the question became: why was the NAS server not keeping up, particularly over a Gigabit connection? We should have been able to push a steady Gigabit stream of data between the two, no sweat, since these were new server-class machines with current OSes, both on the same local Gigabit LAN. And iperf, which exercises the network path without touching storage, had already proved this out.
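For readers who want to see what a zero window looks like at the byte level, here is a minimal Python sketch. It is not the tooling used in this case (the capture appliance did that work); it simply reads the 16-bit window field at offset 14 of a TCP header. Skipping SYN, FIN, and RST segments follows a convention common in packet analyzers, and `make_header` is a hypothetical helper that only exists to build demo input.

```python
import struct

# Flag bits in byte 13 of the TCP header.
FIN, SYN, RST = 0x01, 0x02, 0x04


def advertised_window(tcp_header: bytes) -> int:
    """Return the raw 16-bit window field from a TCP header."""
    if len(tcp_header) < 20:
        raise ValueError("a TCP header is at least 20 bytes")
    # The window is a big-endian unsigned short at byte offset 14.
    (window,) = struct.unpack_from("!H", tcp_header, 14)
    return window


def is_zero_window(tcp_header: bytes) -> bool:
    """True when a data/ACK segment advertises a receive window of zero.

    Window scaling does not matter here: zero scaled by anything is zero.
    """
    window = advertised_window(tcp_header)
    flags = tcp_header[13]
    if flags & (SYN | FIN | RST):
        # Assumption: ignore connection setup/teardown segments.
        return False
    return window == 0


def make_header(window: int, flags: int = 0x10) -> bytes:
    """Hypothetical helper: build a minimal 20-byte TCP header (ACK set)."""
    # src port, dst port, seq, ack, data offset (5 words), flags,
    # window, checksum, urgent pointer
    return struct.pack("!HHIIBBHHH", 2049, 50000, 1, 1, 5 << 4, flags, window, 0, 0)


if __name__ == "__main__":
    print(is_zero_window(make_header(0)))      # receiver says "stop"
    print(is_zero_window(make_header(65535)))  # receiver has room
```

In a real triage you would filter for this condition in your capture tool rather than parse headers by hand; the sketch only shows what the receiver is actually putting on the wire.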

So I advised the next step of looking at CPU and memory resources on both hosts during a copy, which for me is a routine next step when I see zero windows. Even so, I was skeptical this would reveal anything because the customer said they had a hardware RAID controller in the NAS, and we were merely copying a file and nothing else that should hit the CPU. However, much to everyone’s surprise, including my own, the CPU on the NAS was pegged at 100% during the copy! How could that be? It got me asking questions about the NAS server including more details about the storage configuration.

Turns out, while they were using a SAS controller for the array, they had configured it in JBOD mode (versus RAID mode) and were using ZFS, a sophisticated pooling and checksumming file system, to stripe data across the disks. On top of that, ZFS supports full-time gzip compression, and they had enabled it. (I’m making a very long story short with that sentence, but we did eventually learn about the gzip compression.)

Abracadabra!

So we then had a pretty good idea about why CPU might be at 100%, but to test it out, we disabled compression. Abracadabra! No more zero windows and a nice steady 110-112 MB/s in the Windows file copy graph (which is just shy of 900 Mbps). We re-enabled compression and the problem returned. So compression was the culprit. Incidentally, using ZFS to stripe data instead of hardware RAID was ok; it kept up fine.
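The conversion behind that "just shy of 900 Mbps" figure is simple enough to check. A quick sketch, assuming the copy dialog reports decimal megabytes per second (if it reports binary mebibytes, the bit rate would be a few percent higher):

```python
def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_per_s * 8


for rate in (110, 112):
    print(f"{rate} MB/s = {mbytes_to_mbits(rate):.0f} Mbps")
```

Under that assumption, 110-112 MB/s works out to 880-896 Mbps, which lines up with the 900+ Mbps that iperf had shown between the same two hosts.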

So the options were to (1) live with it, (2) disable compression, or (3) throw more CPU at it to keep up with compression on the data flow. A quick look at ZFS compression stats showed they were getting near-negligible benefit from the gzip compression anyway, so they chose to disable it.
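To get a feel for why gzip on large video files can cost a lot of CPU and return almost nothing, here is a rough Python sketch. It is an illustration under assumptions, not a reproduction of the customer's setup: random bytes stand in for already-compressed video, `zlib` at level 6 stands in for ZFS's gzip compression, and the timing reflects one core of whatever machine you run it on, not the NAS.

```python
import os
import time
import zlib

# Approximate steady copy rate seen once compression was disabled.
TARGET_MB_PER_S = 112.0


def measure_gzip(data: bytes, level: int = 6) -> tuple[float, float]:
    """Return (compression ratio, throughput in MB/s) for one DEFLATE pass."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    ratio = len(data) / len(compressed)       # ~1.0 means no space saved
    throughput = len(data) / 1e6 / elapsed    # decimal megabytes per second
    return ratio, throughput


# Random bytes are incompressible, much like typical encoded video.
sample = os.urandom(4 * 1024 * 1024)
ratio, mb_per_s = measure_gzip(sample)
print(f"compression ratio: {ratio:.3f}")
print(f"single-core throughput: {mb_per_s:.0f} MB/s (target {TARGET_MB_PER_S:.0f} MB/s)")
```

If the measured throughput comes in below the target rate, a single core could not compress a Gigabit stream in real time, and a ratio near 1.0 means the effort buys no disk space. On a live ZFS system, the `compressratio` property reports the ratio actually achieved.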

This experience clued me in to the fact that zero windows can be an indicator of a storage issue. Fundamentally, a zero window indicates that the host isn’t keeping up with the data flow (in other words, it’s a host issue, not a network issue) and storage configuration or malfunction can certainly be a reason for that. In this case there was no malfunction; the NAS server was just compressing very large files as they copied over, and it couldn’t keep up with a Gigabit stream.

Things To Think About

- Have you seen these types of issues in your network?

- Do you have any best-practice methods that you use to troubleshoot NAS issues?